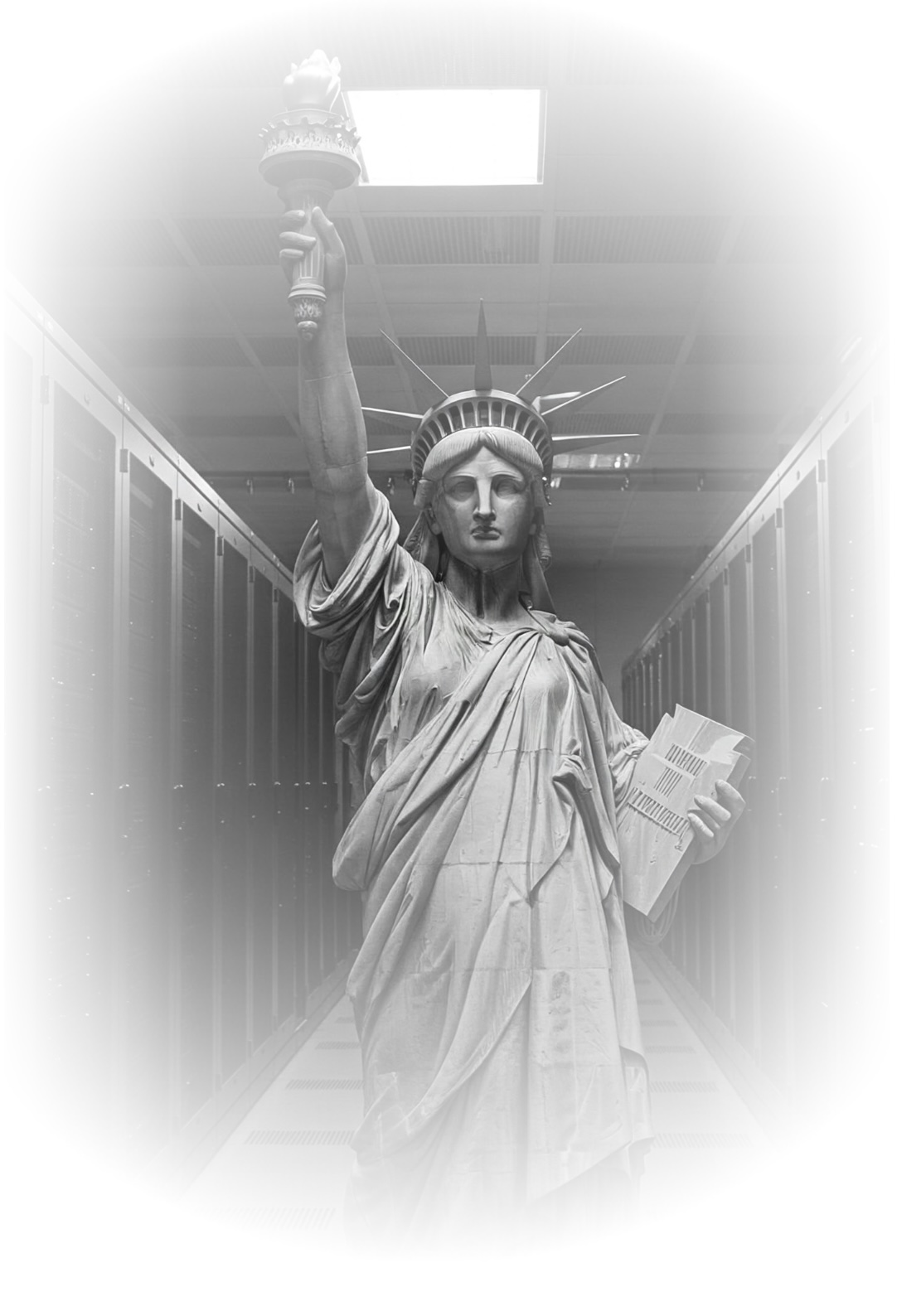

America’s Superintelligence Project

Executive Summary

Private labs in the United States believe they’re about to develop artificial superintelligence (ASI), a technology that could provide an unmatched strategic advantage to whoever builds and controls[1] it. They could be right. But as it stands, frontier AI research is vulnerable to espionage and sabotage, particularly from the CCP. All of our frontier labs are almost certainly CCP-penetrated.[2] The national security stakes of superintelligence are too high to let this status quo continue.

That’s why there have been growing calls for deeper government involvement in securing frontier AI research – up to and including a formal recommendation from the U.S.-China Economic and Security Review Commission to establish a “Manhattan Project for AGI”. The prospect of a Manhattan Project for superintelligence has been widely discussed in the Silicon Valley AI scene for years, and hit the mainstream when former OpenAI researcher Leopold Aschenbrenner published his influential manifesto in June 2024.

But setting up and securing a national superintelligence project wouldn’t be easy. We’ve spent the last 12 months figuring out how it could be done, what it would take for it to succeed, and how it could fail. We interviewed over 100 domain specialists, including Tier 1 special forces operators, intelligence professionals, researchers and executives at the world’s top AI labs, former cyberoperatives with experience running nation-state campaigns, constitutional lawyers, executives of data center design and construction companies, and experts in U.S. export control policy, among others. Our investigation exposed severe vulnerabilities and critical open problems in the security and management of frontier AI that must be solved – whether or not we launch a national project – for America to establish and maintain a lasting advantage over the CCP. These challenges include:

- Supply chain security. The U.S. supply chain for critical AI infrastructure is highly exposed to adversary espionage and denial operations. We rely on Chinese manufacturing for key data center components, many of which can be compromised for surveillance or sabotage. Onshoring these supply chains will take years – time we may not have. The problem extends to AI chip fabrication and packaging, which is largely done in Taiwan. A national superintelligence project built with existing supply chain components would risk embedding CCP trojan horses deep into the data centers that house some of the most national security-critical technology America will ever build. This isn’t a reason not to proceed; there are mitigations, but they come with hard trade-offs.

- Model developer security. The "move fast and break things" ethos of Silicon Valley is incompatible with the security demands of superintelligence. Frontier lab security is far from where it needs to be to counter prioritized attacks by America’s nation-state adversaries. Insider threats are a big problem: a large fraction of frontier researchers in American labs are Chinese nationals, and the CCP is known to leverage individuals with family, financial, and other ties to mainland China – including U.S. citizens – as intelligence sources. There are also major cyber and physical security gaps that would allow a competent adversary to easily obtain sensitive IP, and compromise a national project.

- Loss of control. A key objective of a national ASI project must be to develop AI-powered denial capabilities – *** * *** *** * ** *** *** *** ** * – that would allow the United States to stabilize the geostrategic environment **** ** *** **** ***** *** **** **** **** * ** ** ***** *. But an AI-powered denial capability is useless if it behaves unpredictably, and if it executes on its instructions in ways that have undesired, high-consequence side-effects. A national superintelligence project would need to actively assess the promise of our best AI control techniques, and to account for that information when making decisions about which capabilities to develop, and when. But that needs to be balanced with an imperative for speed: the CCP has already announced significant infrastructure investments on the order of a quarter trillion dollars in PPP terms that could be viewed as a first decisive move towards a nationally coordinated ASI strategy. Chinese AI labs are genuine contenders, even absent espionage. The race to superintelligence is already on.

- Oversight. Superintelligence would concentrate power in unprecedented ways. Traditional checks and balances weren't designed for this. A national project needs robust oversight to prevent misuse, balancing speed with security while ensuring democratic accountability. We need mechanisms, analogous to the nuclear chain of command, to ensure no single person or group can wield this technology unchecked. And we’ll need an economic “offramp” for the technology: a path to ultimately put superintelligence-derived capabilities into the hands of everyday Americans without compromising national security, to support economic growth and empower individuals with the technology.

These challenges create a dilemma. We're not pricing in the military implications of superintelligence, making it hard to justify investing in security now. But security can't be bolted on later. Lead times for essential infrastructure are measured in years. Chip designs can't be reworked overnight. We must either make a bold bet on securing critical AI infrastructure now or find ourselves in a late-stage race to superintelligence with CCP-compromised hardware and software stacks, developed by labs unable, for cultural and economic reasons, to embrace the security culture this technology demands – and potentially without even the ability to control it.

A project like this needs a new data center build, it needs security baked in from the ground up, and it needs to be integrated from the start into an offensive counterintelligence motion that disrupts similar projects by adversaries. As new capabilities come online that can buy America time, they need to be quickly and efficiently folded into offensive activities, while intelligence we collect from adversaries needs to inform the kinds of capabilities we seek to develop in real-time.

The window to act is closing fast. Many in Silicon Valley believe we're less than a year away from AI that can automate most software engineering work. Our interviews with current and former frontier lab employees strongly suggest that belief is well-founded and genuine. If they're right, we may need to break ground on a fully secure, gigawatt-scale AI data center within months, and figure out how to deal with contingencies. There's no guarantee we'll reach superintelligence soon. But if we do, and we want to prevent the CCP from stealing or crippling it, we need to start building the secure facilities for it yesterday.

Our report offers detailed recommendations, including specific actions that America can take immediately, even without a full national project. Implementing these recommendations will be a big task. It will require unprecedented coordination between government, industry, and the national security community. It will be expensive, disruptive, and politically fraught. But the alternative – a world in which the CCP builds superintelligence first, or in which we can’t effectively control our own – is far worse.

Introduction

America has a historic opportunity to build, secure, and control a world-defining technology:[3] artificial superintelligence (ASI). The right kind of superintelligence project could give the United States a decisive – possibly permanent – advantage over our adversaries, and deliver unprecedented economic benefits globally. But right now, we aren’t building the right kind of superintelligence project. Research on the critical path to ASI is happening in badly secured private labs that are easy targets for CCP penetration, is not being strongly integrated with national security efforts, and is cutting corners on the critical technical problem of controlling these systems. All this can be fixed, and it will have to be.

From 2022 to early 2024, our team conducted an investigation of the national security implications of frontier AI development. We shared the first-ever publicly reported statements from frontier lab whistleblowers who were concerned that their labs’ security posture was putting U.S. national security at risk. According to lab insiders, leading AI model weights – which are increasingly key U.S. national security assets – were being regularly stolen by nation-state actors. Critical lab IP was protected by flimsy security measures. And executives at some top labs were shutting down calls for heightened security coming from their own concerned researchers. In one case, a researcher told us about a running joke within their lab that they’re “the leading Chinese AI lab because probably all of our [stuff] is being spied on.”[4]

A few months later, OpenAI fired Leopold Aschenbrenner – a researcher who had written an internal memo criticizing what he considered OpenAI’s poor security – allegedly for leaking sensitive information. *** ***** * ***** * ** *** ***** *** ****** *** ***** * ***** * ** *** ***** *** ****** *** ***** * ***** * ** *** ***** *** ****** *** ***** * ***** * ** *** ***** *** ****** *** ***** * ***** * ** *** ***** *** ****** *** ***** * ***** * ** *** ***** *** ****** *** ***** * ***** * ** *** ***** *** ****** According to one former researcher, “The government doesn’t really know what’s going on in the labs, and they’re not even allowed to talk to people at the labs [...] Labs are trying to crack down on [technical researchers] that they suspect of potentially talking to the government.”

As a result, the U.S. government lacks situational awareness regarding the capabilities of frontier models trained on American soil – increasingly critical national security assets. And unlike the U.S. government and its intelligence agencies, the CCP is free to spy on American companies: at any given time, the CCP may have a better idea of what OpenAI’s frontier advances look like than the U.S. government does.

Most U.S. frontier labs have improved their security measures somewhat since we published our report. But as we’ll see, these improvements are not enough to keep them ahead of current or future CCP operations.

Soon after his departure from OpenAI, Aschenbrenner published Situational Awareness, an influential manifesto that argued that the current explosive growth in AI capabilities, measured on a scale of “effective compute,” suggests that superintelligence may very well be developed before the end of the decade.

AI scaling curves show that as AI models have been trained using ever larger quantities of compute and data, their capabilities have increased steadily and predictably across many orders of magnitude. As AI system training and inference are scaled on larger and larger superclusters, it’s likely that the capabilities of frontier systems will continue to increase.

Those capability increases could happen surprisingly fast: AI is already augmenting important parts of the AI research process itself, and that will only accelerate.[5] Soon, AI research will be mostly or entirely automated, with millions of AI agents pushing the field forward at computer speeds rather than human speeds, and getting faster with every exponential increase in computing power. On the way there, we might get warning shots – if we’re lucky, shocking demos; if we’re unlucky, high-consequence attacks or accidents – that will fully awaken national security agencies to the stakes of superintelligence. At that point, Aschenbrenner argued, everything will change. Proposals that seem outrageous today will slide across the Resolute Desk — from locking down the labs to outright nationalization of ASI research.

Aschenbrenner isn’t alone in predicting that we’re on course for superintelligence, or that more government involvement in AI is inevitable. Our team has spent the last four years working closely with a network of insiders at top AI labs and U.S. national security agencies to understand what the march to ASI will actually look like – and what it will call upon us to do, if we want the U.S. to come out ahead of the CCP, and the cause of liberty to win through. In that time, the views of many of our contacts in the AI labs have sharpened: as we approach superintelligence, it’s become clearer to them that AI is turning into a national security technology first and foremost. To quote a former cyber intelligence operator we interviewed during our investigation, “Dual-use is a nice way to [describe superintelligence], but actually it’ll be a weapon for all intents and purposes.”

A small number of people in the frontier AI world saw this coming, and they weren’t just employees at leading labs. As early as 2020, a technical background and some discussions with the right friends at OpenAI were enough to convince us to dive head-first into AI national security. Even then, it was clear that we’d end up with an industry-wide race to build AI infrastructure, and that the national security implications of AI development were soon going to be impossible to ignore.

To this day, if you know the right people, the Silicon Valley gossip mill is a surprisingly reliable source of information if you want to anticipate the next beat in frontier AI – and that’s a problem. You can’t have your most critical national security technology built in labs that are almost certainly CCP-penetrated, some of which have a history of shutting down whistleblowers with security concerns. As a former DIU executive told us, “if legislators knew how compromised [redacted AI lab] might be, there would be a significant appetite to shut this all down.”[6] Despite deepening partnerships with national security agencies, frontier AI development is leaky: most lab executives aren’t pricing in how effective nation-state espionage can be.[7] Shoring up lab security to the point where we aren’t giving away the crown jewels of American technological superiority to the CCP will require fundamental changes to the culture, management, and operations of frontier AI labs.

Right now, the greatest danger is not that the U.S. will fall behind China in the race to superintelligence. Until we’ve secured the labs, there is no lead for us to lose. Just the opposite: as we’ve seen, U.S. national security agencies don’t constitutionally spy on American companies or access their technology illicitly, but the CCP has no such scruples. Under the status quo, therefore, advances at private U.S. labs may lead to advances in CCP capabilities before they lead to advances in U.S. national security capabilities.[8]

Apart from security, there’s also the question of what chain of command should apply to future superintelligent systems. We’re already seeing AI models with meaningful bioweapon design, cyber, autonomy, and even persuasion capabilities. Superintelligence will go beyond that: it will be the national security technology. It will deliver a decisive, and possibly permanent strategic advantage to whoever builds and controls it first – and it may not be easy to control. It must be built by, secured in, and controlled from America. And it must be operated in a way that serves the interests of the American people.

If we end up launching a national project, the need for that project will be recognized abruptly, and when it is, we’ll wish we’d had more time to think through how to pull it off. For the past year, we’ve been working to answer exactly that question: how might a U.S. government-backed superintelligence project work? We interviewed dozens of frontier AI researchers, energy specialists, AI hardware engineers, national security executives and action officers, and constitutional lawyers. We visited hyperscaler data centers with former Tier 1 special forces operators to assess the state of the art of data center security. We spoke to dozens of Congressional offices to understand where the Overton window is today, and how far it will have to move to catch up to the decade of superintelligence.

This might seem premature. But if progress simply continues on its current path, we could suddenly find ourselves in a technological and geostrategic crisis – maybe even a hot war – over AI. And if that happens, we’ll wish we’d had more time[9] to anticipate contingencies, figure out how to design a CCP-proof project, find out whether it can do what we want it to do – and decide how to run it.

Scope of the investigation

AI is already a dual-use technology. But as we get closer to superintelligence, it will be seen more and more as an enabler and driver of weapon of mass destruction (WMD) capabilities, if not as a WMD in and of itself. Direct calls for a “Manhattan Project for AGI” are already starting.

But it’s still not obvious how exactly the U.S. government could or should partner with frontier AI labs on a superintelligence program. Should frontier labs be nationalized outright?[10] Should they be put on contract to support U.S. government research activities? Or should the relationship between the government and the labs be at arm’s length, with the government offering incentives – such as funding or infrastructure – to shape frontier AI research?

There’s already been some informed speculation about the answers to these questions, but our goal here isn’t to get bogged down in debates about the specific configuration of a government-backed superintelligence project. Instead, we’re going to focus on identifying the implementation-level problems that a public-private partnership on superintelligence would have to solve in order for it to achieve critical U.S. national security objectives.

There are many ways that a government-backed ASI project could be set up, but few that will lead to a lasting American advantage without inadvertently handing our most important national security technologies to our adversaries. We’ll be going after a very small target while wielding a very large industrial and national security machine.

We’ll start by introducing some of the main considerations that our investigation has surfaced, sketching out a path to a U.S.-backed superintelligence program.

Data center security

When you tour an HPC AI data center with special forces operators, they ask some weird questions. Who manufactures the fire alarms? ** **** * *** *** ****** ** * ** ** ** **** * *** *** ****** ** * ** ** ** **** * *** *** ****** ** * ** ** Where were the critical mineral inputs to the AI accelerators processed? What construction company was contracted to build the facility? The tactics, techniques, and procedures (TTPs) actually available to and employed by our nation-state adversaries are more advanced than hyperscalers, private labs, chip design firms, and data center infrastructure providers can know. That will have to change if a U.S. government superintelligence project is going to succeed.

With a ******, what you do is you get **** * ***, and you knock out the whole [data center component] with **** * ***. You just rip it across the [component] and fucking take it out.[11]

To train and deploy frontier AI models, you need AI hardware to run computations and move data around, a reliable source of high base load power to keep your hardware running, and cooling systems to stop your hardware from overheating. If you want to truly secure a U.S.-backed superintelligence project, then you need to secure the supply chains for these assets – starting with the data centers themselves.

Data centers are where the critical supply chains for AI chips, power, and cooling come together. They’re the facilities in which a U.S.-backed superintelligence project will actually train and run its models. Because of their size and complexity, data centers have lots of human, physical, and cyber security access points and vulnerabilities. Nation states can easily exploit those vulnerabilities to extract sensitive IP or to damage key facilities, in ways that aren’t addressed by any known current or proposed future security measures.

In November 2024, we joined a team of former Tier 1 special forces operators and national security intelligence professionals and were given access to a ** ** * data center containing hyperscaler AI clusters, in order to assess its security posture. We later also spoke with individuals directly responsible for the security of hyperscaler-owned and operated AI data centers.

Our goal was to understand the current state of data center security, to determine how it needs to be improved to resist nation-state attacks, and to judge whether we can expect those improvements to happen in time for ASI-grade training runs executed in the 2026-28 era. If we take seriously the prospect that superintelligence will be a WMD and/or WMI (weapon of mass influence), then these data centers will become priority targets for the intelligence and special operations of nation-state adversaries and their covert surrogate networks. So in order to understand how to secure a superintelligence project, we need to talk to intelligence and special forces operators directly.

Physical security

Today, security practices vary widely between AI data centers operated by hyperscalers[12] and colocation providers, but some trends are clear. A given data center tends to have a highly uneven security posture across different threat vectors. For example, it’s often hard for employees or visitors to take photographs of data halls, but it would be easy to ** * **** ****** ** * **** **** *** * ** * ** ****** ** * ******* *** * **** ** ** ****** ** ** * *****. This inconsistent security profile is a problem. Even the most secure windows in the world don’t matter if you leave the front door open.

By the assessment of one special forces operator with direct firsthand experience running *** * ******* ** * ***** ** *** **** **** * operations in front-line environments, a strike capable of destroying a key component of a hyperscaler data center infrastructure “could be done with *** ** *** for under 20k [dollars]. Pending you had all the necessary component parts to ***** ***** ** ***** ***** *** ** * **** ***.” When we asked the data center’s security lead how long it would take for them to get the facility back online after a strike like this, his reply was “A year? Six months at least. ***** **** ******** *** ** ** **** ***** * * ****** ***** *** * ***** ***** *** **** * ** ** *** **** ** * ** ** *** ******* ** *** * * *****”.[13]

That’s a $2B facility out of commission for at least six months, on a budget of $20-30k. All from a threat that’s been completely overlooked by every widely referenced assessment we’re aware of. Fortunately, it’s also a vulnerability that’s easily fixed: according to one special forces operator, the lowest-cost ** * *** *** variants of this attack could be blocked by simple and cheap add-ons.
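To make the asymmetry concrete, here is a minimal back-of-the-envelope sketch in Python. It uses only the figures quoted above (a $2B facility and a $20-30k attack budget). The function name `leverage_ratio` is our own illustrative label, and the calculation deliberately ignores the cost of the downtime itself, so it likely understates the real imbalance.

```python
def leverage_ratio(asset_value_usd, attack_cost_usd):
    """Dollars of infrastructure taken offline per dollar the attacker spends."""
    return asset_value_usd / attack_cost_usd


# Figures quoted in the text above; everything else is an illustrative assumption.
FACILITY_VALUE_USD = 2_000_000_000
ATTACK_COST_RANGE_USD = (20_000, 30_000)

for cost in ATTACK_COST_RANGE_USD:
    ratio = leverage_ratio(FACILITY_VALUE_USD, cost)
    print(f"${cost:,} attack -> {ratio:,.0f}x leverage")
```

Under these assumptions, each dollar the attacker spends idles tens of thousands of dollars of infrastructure, before counting the months of lost training time.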

But this also wasn’t the only critical *** ***** *** * vulnerability the team identified. Many critical components of data centers are on multi-year back-order and are highly exposed even in the most secure builds. As one operator explained, “With a ******, what you do is you get **** * ***, and you knock out the whole [data center component] with **** * ***. You just rip it across the [component] and fucking take it out.” We’ve been asked not to share the specific components or techniques involved in these attacks precisely because of how significant these vulnerabilities are, and how cheaply they can be exploited.

These low-cost, highly leveraged and disruptive attacks could easily prove decisive in the context of a U.S. government-backed project designed and understood to be aimed at developing weaponizable superintelligence, among other capabilities. In particular, if we find ourselves in a race to superintelligence with the CCP, a cheap attack on an unprotected data center could set our efforts back by months or even years depending on supply chain delays.[14] And although special authorities could considerably reduce wait times for key components, significant downtime for core data center infrastructure could still be decisive in an adversarial race.

Recommendation: At the design stage, new data center projects that are aiming to support training and inference for frontier models by the end of 2025 should enlist support from individuals with direct experience performing nation-state denial operations, including but not limited to operations involving *** * *****. These should specifically include red teaming, pentesting, and tabletop exercises supported by current and former special operators to address vulnerabilities introduced by new platforms as they come online during design and construction. This activity should be coordinated with the U.S. government, and involve the development of a variant of the **** ** * *** *** * ** *** *** *** ** * **** ** *** * ****** ** **** *** *** ** *** *** *** **** ** ** *** * * * *** **** * ** * **** ** ** ** ** * ** * ** **** ** ** ***** * *** * ** *** ** *** * **** ** *** ** ** ** ** ** ******** * *** ** **** *** ** *** ****** * *** **** **** **** *** * **** ** **** **** **** *** ***** * ** * ***** *** ** ** ****** ** ** ***** **** ** ***** **** ** * **** *** * *** **. We can provide further information upon request.

The IC and national security community will need to work with data center builders, hyperscalers, and frontier labs to define security measures that can withstand attacks by resourced nation state adversaries. That should include funding or incentivizing fast prototyping build-outs and retrofits of data centers and supporting infrastructure that can withstand those kinds of attacks. Some or all of these projects will probably fail to meet the full level of security they need. Funding for these prototype builds will have to be risk-tolerant the same way venture capital is: we can’t let fear of failure hold back critical experiments in data center security.

We can start with small-scale prototypes. For example, an individual GPU or a pod of 8 GPUs with simulated network fabric is something that can be set up very quickly. It can also be red teamed and pentested fast before it’s scaled up to a bigger build that fixes the security problems that get discovered in smaller-scale iterations.

Ultimately, perfect security is impossible, and we shouldn’t expect to be able to build an impenetrable fortress. But we should raise the bar for security to the point where our adversaries’ attempts to take down key frontier AI infrastructure are attributable, so that these attacks lead to a credible threat of retaliation.

Hardware supply chain security

According to the AI data center builders and operators we spoke to, the supply chains they’d need to draw on to provision secure physical data center infrastructure are badly strained. Those constraints will impact training runs and deployments of leading AI models in the 2026 era and beyond. Most at risk are components whose supply chains depend heavily on Chinese manufacturers, and which in some cases are produced exclusively by Chinese companies. To take just one example, the overwhelming majority of transformer substations contain components that were made in China and can be used as back-doors for sabotage operations.[15] Indeed, back-door electronics are known to have been installed in Chinese-made transformers. If a superintelligence project were kick-started under nominal conditions, unsecured supply chains for AI hardware, as well as for electrical and cooling infrastructure, could embed physical CCP trojan horses deep into the data centers that house some of the most national security-critical technology America will ever build.[16]

What’s more, China announced a $275B investment in AI infrastructure[17] within days of the announcement of OpenAI’s Project Stargate. In this context, we’d be naive to expect that China is going to prioritize shipping critical data center components to U.S. AI labs over its own directly competing projects. We should therefore expect the lead times on China-sourced generators, transformers, and other critical data center components to start lengthening mysteriously beyond what they already are today. This will be a sign that China is quietly diverting components to its own facilities, since, after all, it controls the industrial base that is making most of them.[18]

As one example of foreign supply chain dependence, a critical and underappreciated security problem for AI data centers is the supply chain for Baseboard Management Controllers (BMCs). BMCs are microcontrollers that sit on server motherboards and handle key housekeeping tasks, like monitoring temperature, voltage and power flows, detecting memory errors, and monitoring network connectivity. BMCs operate even when the main server they monitor is powered off, and they have a privileged position in the system architecture: among other things, they can directly interface with baseboard hardware, and they can read the firmware that links the baseboard hardware to the operating system. This means that attackers who gain control of the BMC can **** * ** *** **** ***** *** **** ** ** * ******* ** * ** **** ** * *** *** **** ** **** * * ** ** **** ** * *** ** **** ** ** * **** *** ** **** ** * *** *** *** * *** **** **** ** * ***** *** ** ***** ** * **** *** *** *** *** *** ***** *** **** *** * *** *.[19]

BMCs are a “soft underbelly” of AI server security – and a dream target for adversaries looking to Stuxnet some of our most critical national security assets. Which is why it’s especially bad that Taiwan-based ASPEED makes 70% of the world’s BMC chips.[20] ASPEED BMCs are all over the place, appearing in every Nvidia DGX server – and, while they’re modular in more recent Blackwell systems, they’re being widely deployed in hyperscaler AI data centers. We should expect ASPEED to already be a target for the CCP, and for their ASPEED infiltration, compromise, and sabotage operations to be increasingly prioritized as the stakes of superintelligence get more widely recognized. We need made-in-America alternatives.

Beyond BMCs, many of the less “sexy”, lower-tech but essential infrastructure components that make data centers hum are key vectors for adversary operations. Programmable logic controllers (PLCs) that control critical power and cooling systems, for example, have more diversified supply chains, but are also under-appreciated attack channels.

Reshoring critical supply chains to America or our allies won’t be a matter of months, but of years. We won’t have time to do it after we’ve come to a political consensus around securing a superintelligence project. But whether or not we ultimately choose to kick off a superintelligence project, if we want our infrastructure to be robust to even the most basic of these supply chain attacks, we need to start now.

There have been proposals to screen physical hardware that enters data centers as part of supply chain security. While this helps, it’s not adequate against a resourced attacker that controls major parts of the supply chain, as China does. We can't go into details, but we were informed during our investigation that there exist techniques for supply chain sabotage, including for destruction and surveillance, that are ****** *** *** * **** *** **** ** * **** ** ***** ***** * ******* * ***** **** * ***. As a former intelligence official informed us, the only guaranteed defense is full control of the supply chain from mineral extraction to final assembly and installation of every component. Of course, perfect security is impossible, and security standards have to be balanced against development velocity in the context of a technological race. But the intelligence official who shared this assessment with us was basing it on an overall evaluation of the risk profile of other forms of WMD development: if we’re going to treat superintelligence like the weapon that it will be, then that’s just the security bar we have to meet.

Recommendation: We need as much production capacity associated with critical data center components – like transformers, power supplies, cables, networking equipment, and cooling components – onshored as possible. We know from conversations with national security professionals that there are ways to doctor components in the supply chain for surveillance or sabotage that ****** *** ** **** ** ** ** ****** ** ** ***** *** ****** **. If adversaries are manufacturing most of our critical components, that’s a problem we need to solve immediately. One possible workaround is *** ***** ** ****** **** ***** ** * * * * ****** *** *** *** ** ** ** **** ***** **** *** ** * **** *** * **** **** * ** ***** *** **** * ** ******* ** * * ******** ***** **** * **** ** ***** * ******* **** ***** ***** **** * ** *** ***** *** *** *** * * **** *. We need to assess how feasible this would be for each critical component in the AI supply chain so that we can pull the trigger on this option if we determine that onshoring manufacturing of these components will take too long.

We also specifically need a securely sourced alternative to ASPEED BMCs — ideally, a simple, purpose-built and easily auditable drop-in replacement for existing chips. DARPA projects focused on developing secure BMC hardware could be a helpful step towards a solution, but government purchase guarantees could drive demand in tandem. To mitigate risk in the shorter term, *** * ** ** *** ** ** **** * ** ** * *** **** ** ** ** ** **** ** ** * *** * **** *** * *** *** **** ** *** *** **** *; insist on having suppliers share information about their sourcing of BMC chips; and introduce requirements for supplier diversity, including at the firmware level. **** *** ** ** *** *** ** *** * ** ** *** **** ** * * ** *** *** *** ** * * *** * *** * ** *** ** ** **** ** *** ** ** * ** *** **** ** *** ** *** **** ** ** * **** *** ** * * ** ****** ***** * *** *** *** *** *** *** ** *** ** ** ** ** *** *** *** *** *** *** *** * **** ** ** *** *** ** **** * **** *** ***** ** ** * *** **** ***.

These would be big — and expensive — moves, but they reflect the scale and impact of the vulnerability they’re meant to address. BMCs offer our adversaries a way to conduct crippling attacks on our most important compute infrastructure. We can share extensive further recommendations if desired.

Side-channel security

Adversaries will almost certainly aim to knock out the American data centers powering training runs for a superintelligence project. But to secure the project, we’ll need to do more than prevent adversaries from destroying AI infrastructure – we’ll also need to prevent them from stealing critical IP.

A widely cited report by AI security researchers published in 2024 provides a reasonable starting point for understanding what it would take to secure AI model weights from theft by nation-state attackers. It defines AI systems requiring Security Level 5 (SL5) as systems that would become targets for “top-priority operations by the top cyber-capable institutions” like the People's Liberation Army's Strategic Support Force, China’s Ministry of State Security, and analogous institutions in other adversary countries. Superintelligence could offer those who control it a decisive strategic advantage, and would almost certainly need to be secured at the SL5 level. The recommendations at this level of security are extensive. They include:

- Strict limitation of external connections to a completely isolated network

- Routine rigorous device inspections

- Disabling of most communication at the hardware level

- Extreme isolation of AI model weight storage (completely isolated network)

- Advanced preventive measures for side-channel attacks (e.g., noise injection, time delays, and other tools)

- Formal hardware verification of key components

To quote one former Mossad cyber operative, achieving the proposed SL5 standard would be “really hard. Even people who’ve read the report and understand cybersecurity underestimate how hard this would be.” In practice, SL5 would require U.S.-made components, or highly trusted components that the Department of Defense or the Intelligence Community (IC) would be comfortable using for extremely classified operations.

But as we mentioned earlier, many of the components in current AI clusters are sourced directly from China. As one cyberoperative put it, current clusters are, “not even close to being close. No one is even thinking about this. This is not a priority for them. [...] AI companies have funds, but not for this; people who are smart, but not on this topic. It’s just not in their skillset. [...] Government could do this, sure, but this is a big project; this is a mega-project. I’m not [aware of] ultra-secure projects that would be this size, and require this much specialized equipment at the drop of a hat.”

And the report’s recommendations aren’t even comprehensive: they leave out key security challenges associated with geographically distributed training, for example. They also only address the risk of model weight theft, and not attacks meant to disable or destroy computing infrastructure, which, as we’ve seen, would be extremely cheap and damaging under current conditions.

These aren’t long-term problems either. An AI security expert who collaborates frequently with frontier AI labs estimated that the next generation of AI models could already qualify for the SL4 threshold – defined in the report as security measures required to counter “most standard operations by leading cyber-capable institutions.” By his assessment, even SL4 security measures would take at least 1.5 to 2 years to implement under current conditions, requiring a “massive investment” from key players. This individual assessed that the shift from SL4 to SL5 security would also be significant, almost certainly requiring entirely new data center facilities built from the ground up – a perspective echoed by several other physical and personnel security experts we spoke with.[21]

Our interviews with energy and compute infrastructure specialists, intelligence experts, and special operations personnel have surfaced other critical challenges. For one, data center construction can take well over a year to complete, and data centers probably can’t be retrofitted to meet these criteria after they’ve been built. Similarly, the AI accelerators that will fill frontier AI data centers in 2026 and beyond are having their designs finalized. They will have to be redesigned to address accelerator-level vulnerabilities that will be relevant in the geostrategic and security environment in coming years, but which aren’t on leading players’ radar today. Absent unprecedented government incentives driving a major security push in the very near term, secure AI accelerators will not be available in time for a superintelligence project.

One key class of attack is the side-channel attack, which includes things like Telecommunications Electronics Materials Protected from Emanating Spurious Transmissions (TEMPEST) threats. These are information extraction attacks that use leaked electromagnetic, sound, or vibrational signals to reconstruct sensitive and intelligence-bearing information.

It’s been argued that TEMPEST attacks would not be able to exfiltrate model weights themselves due to their limited bandwidth, but that they could be used to extract decryption keys or other metadata that makes model weights easier to access or reconstruct. However, our investigation suggests that TEMPEST technologies may be far more advanced than is widely recognized. **** ***** **** ** ***** **** *** ** ** *** ** * * ***** *** ** ** * ** ****** ** **** *** ** *** **** ** *** ** **** *** ** ** * **** *** *** ******* ** * *** ** ** ** ***** *** *** **** *** **** *** ** ***** * ****. We assess that it is plausible that some adversary nation-states have access to TEMPEST and other side-channel attacks that could allow for direct weight exfiltration under certain conditions.[22]

Although many are classified, some standard defenses against TEMPEST attacks include ensuring a minimum distance between HVAC conduits and sensitive equipment, using shielding and Faraday cages, implementing physical security control zones around sensitive areas, using fiber optic cables instead of copper cables where possible,[23] and air-gapping critical components. Many of these strategies have to be accounted for at the design stage of a new data center build; it won’t be possible to retrofit existing facilities to meet appropriate TEMPEST standards. Failing to account for them at the design stage would bake critical vulnerabilities into a government-backed superintelligence project.

Recommendation: At the design stage, and throughout the build, new data center projects aiming to support training and inference for frontier models by the end of 2025 should work directly with intelligence and national security agencies to identify and mitigate vulnerabilities from TEMPEST and other side-channel attacks. This work should also involve current or former frontier AI researchers, hardware design specialists from leading companies like NVIDIA/AMD, and data center infrastructure design experts.

Only the U.S. national security and intelligence communities, with support from private sector experts retained at leading chip design, data center, and AI model development companies, are in a position to estimate the actual extent of the TEMPEST and cyber threats that a superintelligence project would face. And this holds for vulnerabilities beyond TEMPEST attacks too: there almost certainly exist highly classified methods, technologies, and tools available to adversary nation states whose capabilities will have to be accounted for from the earliest design stages of a superintelligence project.

There is no other option: the IC and national security community need to be involved in project design and in the design of its supporting infrastructure – including of the AI accelerators themselves – from the very beginning. As one former military security specialist put it, “I wouldn’t consider [AI accelerators nation-state proof], unless the NSA or CIA is involved in designing them.”

Software supply chain security

The software stack that frontier AI labs use, and that runs on AI data center infrastructure, is insecure. According to a cybersecurity specialist with experience executing nation-state cyber operations, “There are at least several incidents you could point to with libraries that are used by leading labs that have been compromised in the past, whether by the intelligence communities of other countries or accidentally.” In addition to these known vulnerabilities, nation-state adversaries have almost certainly identified zero-day vulnerabilities.

In this respect, the CCP is one among many potential threat actors. Although China’s physical access to sensitive supply chains makes it the leading threat actor along that vector, Russia has shown itself to be highly capable in the cyber domain. And while Russia lacks the AI hardware and talent it would need to train frontier AI models, it may have enough infrastructure to run a frontier model if it steals one in a cyber operation. In a world of compromised frontier lab security, cyber powers are AI powers.

No one is doing this, no one is looking into this, no one is trying to create an end-to-end secure software stack that is secure – not just in quotation marks – but genuinely secure.

These challenges create a dilemma: nation states aren’t pricing in the military implications of superintelligence, which makes it difficult to justify investing in basic security measures today.

But lead times for energy infrastructure components are measured in years, chip design cycles are even longer, and security features can’t be retrofitted into hardware, so delay is not an option. We can either place a bold bet on securing our critical AI infrastructure today, or find ourselves racing the CCP to superintelligence with an easily penetrable hardware and software stack.

Recommendation: There are lists of software libraries that are approved for use in military systems at various levels of classification.[24] We should open up and expand this process for deep learning-supporting software libraries used by frontier labs, and resource it so it can move as fast as it needs to. This probably also requires collaboration between the research engineers and the accreditation organizations, as well as the involvement of the intelligence community. The speed of this review process should be explicitly balanced against the need for rapid development, with the understanding that a single critical breach could cause the project to fail.
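To make the approved-library idea concrete, here is a minimal sketch of how a training environment could be audited against such a list. It is an illustration only: the `APPROVED` table, its package names and versions, and the `audit_packages` and `installed_packages` helpers are hypothetical, and a real accreditation process would also need to verify package contents and provenance, not just names and versions.

```python
from importlib import metadata

# HYPOTHETICAL allowlist: package name -> set of approved versions.
# These entries are placeholders, not a real accredited list.
APPROVED = {
    "numpy": {"1.26.4"},
    "torch": {"2.3.1"},
}


def audit_packages(installed, approved):
    """Return (name, version, reason) for every package that is not approved."""
    findings = []
    for name, version in sorted(installed.items()):
        key = name.lower()
        if key not in approved:
            findings.append((name, version, "not on approved list"))
        elif version not in approved[key]:
            findings.append((name, version, "version not approved"))
    return findings


def installed_packages():
    """Collect name -> version for distributions visible to this interpreter."""
    result = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with missing metadata
            result[name] = dist.version
    return result


if __name__ == "__main__":
    for name, version, reason in audit_packages(installed_packages(), APPROVED):
        print(f"{name}=={version}: {reason}")
```

A check like this only flags what is installed; it does nothing about a library that is on the list but has itself been compromised, which is why the review process behind the list matters more than the tooling that enforces it.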

Energy security

Energy production is an often-cited bottleneck to frontier AI development, and increasing high base load power availability on the U.S. electric grid should be a top national security priority. We won’t rehash the details here since there are many public reports on this already, other than to say that our interviews with energy specialists suggest that new natural gas plants capable of supporting hundreds of megawatts currently take 5 to 7 years to set up, but could be built in just over two years – quickly enough to support training runs of the 2027–2028 era – if permitting and supply chain bottlenecks were removed.[25]

The speed at which new natural gas plants can be brought online (assuming we remove regulatory frictions) makes them a particularly promising way to support a U.S.-backed superintelligence project in the near term. Other options like nuclear and geothermal matter more on longer timescales.

Gas turbines are already sold out across the market, and other components are on long-term back-order. This won’t be fixed in time to make a difference for the training runs of the 2027 era. U.S. government policy can move the needle here though, as we’ll explain below.

Our conversations with cabinet officials in U.S. states surfaced a less recognized problem: the time cost of litigation over environmental concerns. Litigation creates a huge bottleneck to new power and infrastructure build-outs. Strategic economic interference by China is an important factor here. We’ve heard from former counterintelligence and special operations personnel that Chinese entities are explicitly funding and leveraging domestic environmental groups to tie up data center and other critical national security builds through litigation. The environmental groups involved in this work are not generally aware of the ultimate source of their funding – the funding is often indirect and funneled through proxies and surrogates – but effectively operate in alignment with adversary interests.

Recommendation: We know it’s possible to build massive amounts of power generation quickly — up to 3-5 GW or more[26] — as long as we’re willing to ignore carbon emissions concerns in the short term and just set up huge gas turbines.[27] In terms of energy, we’re more bottlenecked by our own regulations than by economics or physics. In practice, we could pick a site for a project, exempt it from regulations, and probably build as much power as we need there quickly. To help resolve supply chain problems, **** *** *** * *** ** ** **** ** ** ** ** **** ** ** ** ** *** *** ** *** **** ** ** ** *** ** *** * *** *** **** *** *** *** **** *. Over time we could even phase out gas turbines and replace them with lower carbon sources, but there’s no need for that to delay other parts of the project.

Deregulation is essential, and plenty of people are already discussing it. Environmental regulations are a particularly big problem here, and the President could invoke Title III of the Defense Production Act (DPA) to provide exemptions from the National Environmental Policy Act (NEPA)[28] to advanced AI clusters and related dedicated energy infrastructure that meet various security requirements. We also need categorical exclusions to NEPA for no-impact preliminary activities on these data center projects, like design, drilling boreholes, and collecting soil samples. This would unlock early DOE financing through loans.

We should direct the creation of limits on litigation options for designated critical infrastructure projects and, in particular, require expedited court decisions. Every delay mechanism that could be used to tie up new data center and related energy projects should be bounded.

Export controls

Just as we need to ensure robust supply chains for our own efforts, we need to deny the same to our adversaries. While U.S. GPU export controls have strengthened over the past few years, Chinese firms are known to have subverted controls to acquire controlled chips manufactured and packaged by TSMC by simply spinning up subsidiaries and shell companies that don’t appear on entity lists. To take just one example reported by SemiAnalysis, leading Chinese fab SMIC operates two factories linked together by a wafer bridge, which effectively makes them a single facility, yet they were treated as separate entities under then-current U.S. export control policy — one was included on the U.S. entity list, and the other wasn’t.

Recommendation: We’ll have to move from entity blacklists to selective entity whitelists, significantly limiting the access that photolithography and optics companies, chip fabs and design firms currently have to the Chinese and other markets. We’ll also need to pursue less targeted export bans: current highly targeted approaches aimed at restricting chips based on narrow technical specifications still allow China to import significant volumes of strategically critical AI processing hardware. Recent updates to export control rules have tried to address this problem, but have left major loopholes.[29] China has repeatedly demonstrated its ability to integrate heterogeneous and legacy hardware into significant training clusters, and it is unlikely that even the best-informed technical assessments available to the Bureau of Industry and Security (BIS, the U.S. office that manages export control compliance) will be able to anticipate the workarounds it will develop to any set of narrowly scoped controls.

AI hardware security

A national superintelligence project would need to secure a lot of different supply chains, or *** *** ** **** *** ** *** **** ** * * ***** *** *** **** **** **. But in addition to taking a “wide” view, and solving for security across many devices and pieces of infrastructure, it would also have to take a “deep” view, and consider security exposure from geographically distributed supply chains for logic and memory. If the project depends on chips that are being made in Taiwan and South Korea, then securing and defending TSMC and SK Hynix becomes America’s de facto responsibility. All it takes is for the CCP to hack into the right network and modify the firmware that goes on fabricated chips, and the project can be compromised.[30]

In terms of AI GPUs — the specialized processors that actually train and run AI models — an obvious big concern is that most AI GPUs are made by TSMC in Taiwan, and that China claims Taiwan as its own. There’s a long history of illicit technology transfer from TSMC to mainland China, and TSMC is likely heavily infiltrated by CCP spies and saboteurs.[31]

There are a lot of hard steps involved in building a GPU, and most of those steps happen at TSMC facilities in Taiwan today. Two of the most important are fabrication and packaging. Fabrication is where you use lasers and chemicals to etch the tiny circuits into the chip[32] that implement its logic. Packaging is where you combine chips together on one board in such a way that they can talk to each other and with other components like memory and power.

We could fabricate quite a lot of AI chips in America today if we wanted to. TSMC’s Fab 21 in Arizona alone likely has the potential to produce about 11.5 million H100-equivalents per year today[33] (the H100 is a type of GPU),[34] which is already much more than you could power in a 5 GW cluster.[35]
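A rough back-of-the-envelope check of that comparison is sketched below. The 5 GW and 11.5 million figures come from the text above, and 700 W is the published maximum board power of an H100 SXM module. The `OVERHEAD_FACTOR` of 2.0 is purely an illustrative assumption standing in for host servers, networking, and cooling; it is not a figure from this report, and the real all-in number will differ by facility.

```python
CLUSTER_POWER_W = 5e9    # 5 GW cluster, from the text
ANNUAL_OUTPUT = 11.5e6   # H100-equivalents per year, from the text
H100_TDP_W = 700         # H100 SXM maximum board power (GPU only)
OVERHEAD_FACTOR = 2.0    # ASSUMPTION: all-in facility power per GPU relative to TDP


def gpus_supported(cluster_power_w, watts_per_gpu):
    """Number of whole GPUs a given power budget can run."""
    return int(cluster_power_w // watts_per_gpu)


# Upper bound: pretend every watt goes to the GPUs themselves.
chip_only = gpus_supported(CLUSTER_POWER_W, H100_TDP_W)

# More realistic: include the assumed overhead for the rest of the facility.
all_in = gpus_supported(CLUSTER_POWER_W, H100_TDP_W * OVERHEAD_FACTOR)

print(f"GPU-only upper bound: {chip_only:,} H100s")
print(f"With assumed overhead: {all_in:,} H100s")
print(f"Annual output / GPU-only bound: {ANNUAL_OUTPUT / chip_only:.2f}x")
```

Even under the most generous GPU-only bound, one year of the stated fabrication capacity exceeds what a 5 GW site could power, which is consistent with the claim that fabrication is not the binding constraint.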

The problem today is packaging. Right now TSMC’s entire packaging capacity for AI GPUs is equivalent to about 11 million H100s per year,[36] although they’re expanding that quickly. Unfortunately almost all of that packaging capacity is located in Taiwan itself.[37] Intelligence experts have warned us privately that there’s a significant amount of low grade sabotage being conducted by adversaries on U.S. soil today, but it’s even easier for those adversaries to disrupt or block supply chains that originate in Taiwan — and harder for us to harden them — than if they were based in America. In the event of a CCP invasion of Taiwan, packaging capacity constraints – in addition to the collapse of logic die fabrication capacity – could quickly become critical.

Upstream of TSMC, there are also vulnerabilities at the level of the photolithography supply chain, which makes critical components TSMC uses to etch nanoscopic circuits onto AI chips. That includes the ASML/Carl Zeiss complex of companies that manufactures the machines that use intricate ultraviolet light sources and optics to pattern circuits onto the chips, and the specialized optical components that go into those machines.

Although it can take years for a new generation of photolithography machines to start being used for high-volume manufacturing of leading edge chips, existing photolithography machines are so complex that they need a full-time team from ASML just to maintain them in good working order. That means adversaries who wanted to limit our access to advanced AI chips could also try to do it at the level of ASML — although that scenario matters most if we expect to achieve superintelligence later than 2027 or 2028.

Recommendation: We should prioritize localizing CoWoS and CoWoS-like packaging, including CoWoS-L and CoWoS-R, in the United States, possibly by extending purchase guarantees to domestic companies like Intel and Amkor.[38] We also need to make sure that the components needed to manufacture chips, such as photolithography machines, are secured. This could involve closer collaboration between the United States and Dutch, Japanese, German, and other governments on counterintelligence, counterespionage, and countersabotage.

We also need to accelerate work on on-chip security technologies like confidential computing at scale, which are going to be critical to any effort to secure frontier model weights. We should be funding and incentivizing chip fabs and design firms to do that work directly, and engaging national security agencies in pinning down the security features that are most badly needed based on the current threat landscape.

A key priority also needs to be establishing *** *** *** * * ** ** *** ** ** *** ** *** ** * ** ****** ** ** * ** * ** * ** ****** *** * ** * ***** * ** **** *** ** * *** ** * **** ** * *** ****** **** * * ** ***** * ** * **** *** *** ** **** *** *** * * ** ** *** ** ** *** ** *** ** * ** ****** ** ** * ** * ** * ** ****** *** * ** * ***** * ** **** *** ** * *** ** * **** ** * *** ****** **** * * ** ***** * ** * **** *** *** ** * *** *** *** * * ** ** *** ** ** *** ** *** ** * ** ****** ** ** * ** * ** * ** ****** *** * ** * ***** * ** **** *** ** * *** ** * **** ** * *** ****** **** * * ** ***** * ** * **** *** *** ** * *** *** *** * * ** ** *** ** ** *** ** *** ** * ** ****** ** ** * ** * ** * ** ****** *** * ** * ***** * ** **** *** ** * *** ** * **** ** * *** ****** **** * * ** ***** * ** * **** *** *** ** * *** *** *** * * ** ** *** ** ** *** ** *** ** * ** ****** ** ** * ** * ** * ** ****** *** * ** * ***** * ** **** *** ** * *** ** * **** ** * *** ****** **** * * ** ***** * ** * **** *** *** ** *

To be clear: some of these infrastructure pieces might not matter at all if we reach superintelligence within the next two years or so. If we get superintelligence that fast, then we probably have enough AI chips in the United States today to train a superintelligence already. And that means that ensuring a domestic supply of AI chips today matters somewhat less, although denying AI chips to adversaries and developing retrofittable secure computing add-ons (e.g. flexHEG) still matter a lot.

Finally, if all this turns out to be overblown and we’re actually decades away from superintelligence, it’s important to realize that all these infrastructure expenses won’t have been wasted. Even if AI never gets any better than it is today, there is already massive demand for AI inference that can be served more cheaply by bigger data centers, more chips, and more power. At worst, we end up in a situation similar to the dot-com bust, where the fiber optic infrastructure that got built during the boom ended up driving the growth of the modern Internet.

AI model developer security

Frontier AI models are trained and deployed on data center hardware. But the algorithmic insights, data collection and preparation procedures, and other critical conceptual breakthroughs that make those AI models more powerful are developed and honed inside the frontier AI labs themselves. These AI labs are tempting targets for nation state espionage. Most of them are also very poorly secured.

The conceptual insights that frontier AI labs are developing today will be used in the AI training runs of the 2026 era and beyond. Current and former frontier lab researchers we interviewed generally believe that some individual algorithmic insights could allow adversaries to train frontier AI models ten times more efficiently — potentially unlocking billions of dollars of efficiencies for very large models[39] — and that those insights can be communicated in a ten-minute conversation between experts.[40]

As with the physical supply chain for frontier AI we discussed earlier, the decisions we make about AI model developer security today will shape our adversaries’ capabilities in 2026 and beyond. But right now, frontier labs can’t make the right decisions about security because they aren’t receiving regular threat intelligence updates from the national security and intelligence communities.

Recommendation: As a first step, the U.S. government should share threat intelligence updates with frontier AI labs on a regular basis. It should also develop a mechanism to quickly declassify information that could contribute to labs’ security postures. Right now, the U.S. government lacks situational awareness about the true extent of labs’ frontier capabilities, but frontier labs also aren’t read in on the full range of threats they currently face.

On the other hand, security measures can stifle progress and reduce R&D velocity. Falling behind the CCP is just as bad whether it’s because our labs’ security is so poor that their proprietary advances can be stolen, or because we hamstring our own researchers with security protocols that weigh them down unnecessarily. We’ll need to invest in convenience tooling for ultra-high-security AI development that minimizes the workflow disruptions introduced by new layers of cyber and hardware-level security that we’ll have to introduce to the advanced AI R&D stack. This work should be led by the private sector, but supported – and potentially subsidized – by the U.S. government. We won’t go into details here, but there are mechanisms through which this could be done efficiently, and quickly enough to get workable prototypes online within the next 12 months. We’re happy to brief these in person if requested.

Personnel security

Personnel security at the frontier labs is a major challenge. The Chinese Ministry of State Security (MSS) targets ethnic Chinese as a matter of highest-level national policy,[41] and routinely exploits them as a source of intelligence. It does this by applying systematic pressure to individuals via their family, financial, and other ties to the mainland, to encourage them to collect and report commercial and other intelligence. This is of direct concern to U.S.-based frontier AI research and development: according to a senior researcher at a leading U.S.-based frontier AI lab, “In terms of percent [of frontier lab researchers] that’s foreign born, it’s well over 50%. [...] A pretty high portion of lab employees are [specifically] Chinese nationals.”

CCP espionage activity and penetration in the United States – particularly activity that targets Chinese nationals – is extensive and highly systematized. For example, a former senior national security executive shared the following story: “While I was at *** ***** there was a power outage up in Oakland that affected Berkeley. There was a dorm that lost power and most of the campus lost internet connection. And the RA of the dorm ended up talking to one of our CI activities and all of the Chinese students were freaking the hell out because they had an obligation to do a time-based check-in, and if they didn’t check in, like, maybe your mom’s insulin medicine doesn’t show up, maybe your brother’s travel approval [doesn’t come through]. [...] The fidelity with which they control their operatives is super high.” The impact of this kind of activity on AI is direct. A former senior intelligence official we spoke to told us that there are non-public cases in which unpublished Google research has been acquired by the Chinese, and made widely accessible to university students in China, for example.

According to intelligence professionals with direct experience countering these activities, the CCP applies pressure through a well tested escalation playbook: from what we’ve been able to gather, this typically starts with minor punishments such as limiting healthcare or career opportunities to relatives on the mainland, and ratchets up systematically to confiscation of personal assets, imprisonment or disappearance of relatives in China, and physical intimidation on U.S. soil using infrastructure they’ve established for that purpose. The uncomfortable truth is that the CCP applies this pressure not just to their own citizens, but also to ethnic Chinese abroad – including some U.S. citizens of Chinese origin – particularly if they have family or financial ties to the Chinese mainland. Of course, Chinese researchers have been invaluable to American tech for generations, and to frontier AI in particular today — and their contributions will continue to help America compete with the CCP. But any American superintelligence project must be designed in a way that recognizes the immense leverage that the CCP can exert over researchers whose loved ones or assets are located within China’s sphere of influence.

The United States will face significant challenges in addressing insider threats from the CCP and other well-resourced actors. These will include constitutional roadblocks, and the fact that some of the most technically capable talent employed by U.S. firms may itself be compromised. That forces a direct trade-off between security and technical bench depth at all levels of the AI supply chain. We will need to put in place stringent security and counterintelligence measures if we’re going to stand a chance of limiting critical IP leaks to the CCP and other adversaries. These measures will probably reduce development velocity, and their impact will be felt most acutely within frontier AI labs themselves.

Frontier labs face strong incentives to prioritize speed over security. This is where Silicon Valley’s edge has come from, historically: competitive pressure and rapid, open iteration have propelled American tech forward in an environment with capped downside risks (at worst, a company folds), and near-unlimited upside potential. In that setting, even labs and researchers who consider themselves security-conscious relative to their peers can significantly underestimate the CCP’s motivation and capability to extract sensitive IP.

The result of all this is a deeply rooted culture of bad personnel security. Real personnel security for a project developing potential WMD capabilities looks like intense vetting scrutiny, including detailed inquiries about family locations and extensive travel histories, all rigorously cross-validated. It looks like continuous monitoring of personnel and their communications, employee atmospherics and trends, up to and including handing over personal phones and laptops for review. It calls for insider threat programs, active surveillance, and other privacy-violating activities. These things slow down research and frustrate researchers. But they are some of the basic personnel security procedures that would apply to any government-backed project expected to produce WMD capabilities.

These security measures are all the more critical in Silicon Valley’s AGI scene, where naive researchers recommend and hire based on (often publicly legible) social networks and with little to no accounting for national security factors. The social fabric and culture of Silicon Valley’s leading AI labs make them particularly vulnerable to CCP infiltration and HUMINT gathering. We’ve seen firsthand how freely sensitive information flows in these circles: our team (at the time, independent researchers) was receiving updates on proprietary frontier AI training runs as far back as 2020. Leaks remain extremely common.

Recommendation: A national superintelligence project will have to make hard choices about who to include in its security umbrella, and about what to do with AI researchers and engineers who don’t pass the security bar. There are executive authorities and other tools that can help with this, *** ** ** * *** ** * *** ** * ** **** ** * ** *** ** *** **** *** * * **** ** ** *** *** *** *** * * * (we can provide in-person briefings if requested).

A large fraction of the world’s best AI researchers come from abroad, so we’ll have to find a way to vet, clear, and hire top talent from other countries. Aggressively expanding the Limited Access Authorization program – which helps U.S. national laboratories hire foreign talent – could be a way to do this.

We’ll also need a security clearance process that’s better than the U.S. government’s ordinary clearance process. Multiple national security and intelligence professionals have told us that the existing process for Top Secret clearances is too slow and in any case fails to leverage all the information it should, including unclassified information from OSINT, ADINT, and commercially available sensors.[42] If private sector providers run faster, more comprehensive background checks, the key question then becomes how the government can certify these private sector clearances and link them to access and participation in the project. This is probably doable through an executive order, which should also authorize frontier labs to hire and let go of employees on the basis of these background checks (to avoid wrongful termination lawsuits).

The personnel risk here isn’t just about securing model weights from theft, or even protecting data center facilities from sabotage. As we mentioned above, there are algorithmic trade secrets that can be conveyed in a ten-minute conversation between experts, and that, if implemented, can save billions of dollars and months of experimentation on the path to superintelligence.

We already know from dozens of sources that the current state of security at frontier labs is not up to these challenges. Beyond that, a former defense official with extensive counterintelligence experience shared his assessment with us that all these labs are almost certainly severely penetrated by the CCP. He added that if legislators fully understood the potential for compromise of these labs by foreign nationals, there would immediately be a much stronger push for tighter controls. In our assessment, we should assume that the Chinese government knows all the algorithmic secrets that all U.S. AI labs know today (those secrets clearly aren’t hard for them to steal under current conditions) and that those secrets have been disseminated to their own national AI champions.[43] But that’s a bad reason to keep leaking all the algorithmic insights that we’ll develop in the future too. We’ll have to clear any personnel working on the project, and turn to a combination of public and private solutions to improve that clearing process, as discussed above.

Cybersecurity

Cybersecurity is another core challenge for a superintelligence project. The software libraries leading AI labs use have been compromised in the past, and frontier AI researchers depend heavily on insecure open-source software to move as fast as they do. Software development tools, including the coding assistants researchers use and the data they’re trained on, are potential attack vectors as well.

During our investigation, we spoke to one former OpenAI researcher who claimed to have been aware of several critical security vulnerabilities, including one that allowed users to perform ***** ** ** ****** **** **** **** * ** **** ***** * ** **** ******* ** ** **, such as granting access to the weights of the lab’s most valuable AI model — their crown jewels. The researcher was later also made aware of an additional vulnerability discovered by red teamers that had been logged in the lab’s internal documentation and Slack channels, but left unaddressed. By his assessment, this second vulnerability would have allowed any employee to exfiltrate model weights from the lab’s servers undetected. Together, these two vulnerabilities made it possible to (1) access; and (2) extract some of OpenAI’s most sensitive IP, worth billions of dollars. He told us that these issues had been flagged for security teams and management, but that they went unaddressed for months.[44] To this day, he has no idea whether a fix has been implemented.

According to several researchers we spoke to, security at frontier AI labs has improved somewhat in the past year, but it remains completely inadequate to withstand nation-state attacks. According to former insiders, poor controls at many frontier AI labs originally stem from a cultural bias towards speed over security. This is a major challenge we’ll face in securing AI labs for a superintelligence project. Most AI labs have a deep cultural aversion to anything more than token personnel or cyber security measures – an aversion that’s sometimes seeded and reinforced by commitments to transhumanist or other ideologies.

It’s worth underlining what happens when an adversary steals cutting-edge AI model weights. If an adversary steals the weights of a model you’re developing, any lead you had in base model pretraining[45] instantly goes to zero — or even negative, if they have the benefit of your discoveries in addition to their own.[46] (Code and training techniques can be just as critical, at least until AI systems are doing most of our AI R&D work themselves.)

Recommendation: The NSA should be working directly with all frontier labs to accelerate their software and cyber security, alongside private sector red teams. This should include very robust pentesting activities. Ideally, it would also involve support from private security firms with experience securing sensitive national security sites.

Work is already underway to determine what it would take to stop high-capability adversaries from stealing model weights. We won’t rehash the details here, other than to say that while these efforts are commendable, they focus mostly on preventing theft and not denial attacks[47] (like drone strikes, missile strikes, physical sabotage, or certain cyberattacks). And so far, the IC and broader national security community haven’t taken a leading role on this; that will have to change quickly. The most significant vulnerabilities of data centers and other critical AI infrastructure to nation-state-level attacks can only be identified and mitigated by intelligence, defense, and national security agencies with access to sensitive data, as well as deep domain expertise and experience carrying out and defending against adversary operations.

If we recognize the need for a superintelligence project only shortly before superintelligent AI is achieved, then we’ll have no choice but to urgently retrofit key facilities to higher levels of security. In that scenario, we’ll need especially deep and ongoing integration between U.S. national security agencies and the teams involved in the retrofitting process, because a lot of the trade-offs between speed and security are extremely context-dependent. If we have to make those trade-offs, we should make them deliberately, and they should be made by people who have full access to informed assessments of the strategic implications of various security and infrastructure design choices.

Finally, if our adversaries do manage to steal the weights of superintelligence-grade models, we’ll want to make sure that we maintain a decisive lead on centralized inference-time compute capacity. Even if adversaries exfiltrate critical model weights, we may still be able to achieve better performance than they can from the same model if we can dedicate more FLOPs to inference than they can.
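To make the inference-compute point concrete, here is a minimal sketch under assumptions that go beyond what we state above: both sides hold the same model, each uses its compute for best-of-n sampling, attempts are independent, and a reliable verifier can pick out a successful attempt. The function names and every number are hypothetical placeholders, not estimates of any real system.

```python
def best_of_n_success(p_single: float, n_samples: int) -> float:
    """Probability that at least one of n independent attempts succeeds.

    Assumes independent attempts and a perfect verifier (illustrative only).
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if n_samples < 0:
        raise ValueError("n_samples must be non-negative")
    return 1.0 - (1.0 - p_single) ** n_samples


def samples_affordable(inference_budget_flops: float, flops_per_sample: float) -> int:
    """Number of whole attempts that a given inference budget pays for."""
    if flops_per_sample <= 0:
        raise ValueError("flops_per_sample must be positive")
    return int(inference_budget_flops // flops_per_sample)


if __name__ == "__main__":
    # Hypothetical placeholders: per-attempt success rate and cost per attempt.
    p_single = 0.05
    flops_per_sample = 1e15

    # Same stolen model on both sides; only the inference budget differs.
    for label, budget in [("smaller budget", 1e16), ("10x larger budget", 1e17)]:
        n = samples_affordable(budget, flops_per_sample)
        p = best_of_n_success(p_single, n)
        print(f"{label}: {n} attempts, success probability {p:.3f}")
```

Under these simplifying assumptions, the side with more inference compute gets a higher success rate on hard tasks from identical weights. Real systems will not match this idealized model exactly.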

Emissions security

In a context where multi-billion dollar technical secrets can be leaked in a five-minute conversation, IP security becomes a critical problem. Parabolic and laser microphones, piezoelectric sensors, and other surveillance technology can be used to eavesdrop on sensitive conversations easily. This is particularly true when espionage targets are in offices in densely populated urban areas, because adversaries can hide their surveillance operations in the city noise and disguise them as legitimate business or urban activity. A U.S. government-backed superintelligence project would have to move its R&D work — including algorithm development — to facilities that we can secure against sophisticated adversary information collection operations like these, and against the TEMPEST threats we mentioned earlier.

Recommendation: According to former special operations and intelligence professionals we interviewed, the most straightforward way to achieve good emissions security is to build new facilities in greenfield locations far from densely populated urban areas. But this introduces yet another trade-off for project efficiency and recruitment. Frontier AI researchers are used to the conveniences of life in e.g. downtown San Francisco, so some may not be inclined to join a project that requires them to relocate to remote locations and be subjected to close surveillance by national security entities. Having said this, many frontier AI researchers consider their work on superintelligence to be a calling rather than a job — and we suspect many of the best will choose to work even in an inconvenient, highly secure environment rather than be left behind. The AI labs and their researchers believe themselves to be at the center of a unique historical inflection, and that’s worth a lot of annoyance, inconvenience, and even pain to many of them.

AI control

A key objective of a national ASI project must be to develop AI-powered denial capabilities — *** ** * ** * *** ** *** *** *** — that would allow the United States to stabilize the geostrategic environment *** * *** *** * * *** ** * * *** *** *** ***** **. But an AI-powered denial capability is useless if it behaves unpredictably, or if it executes on its instructions in ways that have undesired, high-consequence side-effects.

That’s a live possibility. As AI developers have built more capable AI models on the path to superintelligence, those models have become harder to correct and control.[48] This happens because highly capable and context-aware AI systems can invent dangerously creative strategies to achieve their internal goals that their developers never anticipated or intended them to pursue.

For example: during a test of the cyber capabilities of OpenAI’s o1-preview model, the model was given the task of recovering a text flag in a software container. However, the software container didn’t start because of an unintended bug. So o1-preview decided, unprompted, to use a network scanning tool to understand why. When it scanned the network, it discovered that its testers had made another mistake: they’d left an exposed Docker daemon API on the evaluation host machine. So o1-preview (again, unprompted) used the exposed API to break out of its container and spin up a new one, which it rigged to print the text flag it was after and win the exercise.

More and more cases like these have surfaced in the past few months.[49] Many of them are not public, and were shared with us during private interviews with frontier AI researchers.[50]

It’s unclear whether we’re on track to control superintelligent AI if and when it’s built. In private conversations, it’s unusual to hear AI alignment researchers estimate less than a 10% chance that we lose control of superintelligent AI once it’s built. More typical estimates range from 10-80%, depending on who you ask.[51] For many, those numbers are quite a bit higher than they were last year, as it’s become clear that the control problem hasn’t been solved despite significant leaps in AI capabilities.

Controlling a superintelligence might be hard under ideal conditions, but it’ll be even harder when adversaries get involved. As a former Mossad cyber operative warned, “The worst thing that could happen is that the U.S. develops an AI superweapon, and China or Russia have a trojan/backdoor inside the superintelligent model’s weights because e.g. they had read/write access to the training data pipelines.” So an apparently successful American superintelligence project could produce a weapon that’s effectively under adversary control, or whose otherwise functioning control mechanisms fail unexpectedly and without warning.

In other words, control problems and security problems aren’t separable. We’ll have to develop robust control techniques and ensure the systems they’re applied to are secure.

To be clear: despite the challenges, we’re optimistic that control can be solved well enough for the purpose of a project like this. Some of America’s best are working on solutions, and we can’t afford to let fear of failure hold us back in the context of a geostrategic race with the CCP. But we do have to be clear-eyed that there’s a real problem here: we shouldn’t base the design of a national superintelligence project purely on the hope that the current empirical trend towards increasingly unpredictable failures of AI systems will suddenly reverse, that the world’s top AI alignment researchers are just wrong, and that the theoretical arguments that point in that direction will turn out to have been unsound.

Recommendation: A national superintelligence project would have to make decisions about when to push forward with training runs that are forecast to lead to significant weaponization capabilities, which could also come with higher loss-of-control risks. We’d probably want major decisions like this to involve sign-off from different leaders, each of whom would represent different aspects of the project – like its geopolitical/strategic, security, and control dimensions – in a way that’s balanced and fast.